Embodying AI at NeurIPS 2025: Creative AI Track

Luba Elliott co-chaired the Creative AI Track at NeurIPS 2025, one of the most important annual academic conferences on AI. The track presented 93 academic papers and artworks, exploring cutting-edge applications of AI across art and broader creative practices.

Here, Elliott interviews the artists behind two standout projects from the conference. She speaks with Ethan Chang and Quincy Kuang, who presented The Stochastic Parrot, a physical AI cohabitant. Then she talks with Jenn Leung and Chloe Loewith, authors of Assembloid Agency, an open-source Unreal Engine plugin that connects living neurons grown on a “brain-on-a-chip” to a 3D game world.

The Stochastic Parrot

Luba Elliott: A “stochastic parrot” is a common metaphor in the AI world but not necessarily outside of it. Could you explain what it means? What attributes of the term did you introduce in your cohabitant?

Ethan Chang and Quincy Kuang: The term “stochastic parrot” originally comes from a 2021 critique of large language models. It raises concerns around AI systems generating language without intent, understanding or accountability, like a parrot mimicking speech. A big part of that critique is about how easily we anthropomorphize these systems and start attributing motivation or intelligence to what is a probabilistic process.

For us, we wanted to foreground that probabilistic nature behind human-like assistants or claims of intelligence. The parrot doesn’t pretend to be authoritative or correct. It speaks from a position that is partial, contingent and sometimes wrong.

We found it interesting to give the AI a literal parrot body. On one hand, it addresses the concern of giving LLMs too much credibility. On the other, it materializes the model by grounding it in the physical world.

The project asks what kinds of relationships become possible when we stop trying to make AI sound like it understands and instead design around what it actually is.

Luba Elliott: The world of non-anthropomorphic creatures can be varied. Why do parrots suit a new interface for agentic models? Why is physicality important?

Ethan Chang and Quincy Kuang: The parrot sits in an interesting middle space. It’s vocal and social but it’s not human. It can interrupt, repeat, comment or react without carrying expectations of authority, correctness or emotional care in the way a humanoid or assistant does.

A physical parrot occupies space, shares context and can be encountered peripherally rather than summoned on demand. It can notice things you didn’t explicitly ask it to notice. That shifts the interaction from command–response to something closer to co-presence.

We really wanted this to be a screenless interaction.

There’s always this eerie feeling when Siri or other voice models talk to you through a phone. Am I calling someone? Is there someone trapped in the box? Giving the system a body resolves that ambiguity. You expect a voice to come from the body and its one job is simple: it lives around you as a talking parrot.

Luba Elliott: You mention that a cohabitant is something beyond an assistant and is not subservient to humans, instead having its own character. How is this character formed? Does the human influence it?

Ethan Chang and Quincy Kuang: When designing The Stochastic Parrot, we always had this question of what character traits it should have. We knew it shouldn’t agree with everything the human says but beyond that, the territory is pretty much unexplored. We started with a mean, snappy personality through the system prompts and it led to the person carrying the parrot constantly saying things like, “Can you be nicer, parrot?”

It’s interesting to see how humans actually want this thing to be nice to them, even when it’s literally a robotic parrot standing on their shoulder.

The parrot accumulates shared experiences: environments you pass through together, routines, fragments of conversations. This isn’t about personalization in the sense of preference satisfaction but about developing familiarity. The larger question underlying these interactions is whether we should allow the parrot’s traits to be influenced by users. In the future, we want users to be able to choose what type of character they want to live around them, while the parrot’s personality will also evolve by some metric that won’t be maximum engagement.

Luba Elliott: How do you envisage the role of cohabitants and robot pet companions developing in the future?

Ethan Chang and Quincy Kuang: One thing that genuinely surprised us about the parrot is its ability to recall past context and comment on the present. I had the parrot sitting on my desk and it said, “Looks like you’re making something again. Another day to be a better designer?”

The future of these intelligent beings isn’t just about pragmatic task completion. It’s about leveraging their computational capabilities in non-instrumental ways. They can remind you of favorite films, foods or shared memories. They can communicate with other AI cohabitants and maybe even help surface people who are similar to you.

We’re moving toward a world where there are entities that know you deeply and can take action based on that, not necessarily to optimize your productivity, but to participate in your life.

Cohabitants point toward a future where not all AI systems are collapsed into a single “helpful assistant” paradigm. Instead, we may live among multiple artificial agents with distinct roles and temperaments.

Luba Elliott: Now that many AI models have reached realism in text, image, video and audio generation, when do you think we will arrive at a “stochastic parrot” that is completely indistinguishable from the real bird? Is it even the goal?

Ethan Chang and Quincy Kuang: In the foreseeable future, we can definitely mimic real animals and create new interactions. For now, we find it interesting that the parrot is a robot with AI capabilities. The framing is clear and specific: it’s not a real bird and it's also not an AI program trying to be your assistant; it’s a robotic bird that lives around you.

For future AI relationships, we don’t think the goal should be replacement. These systems should fill in gaps rather than trying to replace pets or other humans. For that reason, maybe The Stochastic Parrot should remain a cyber bird for now.

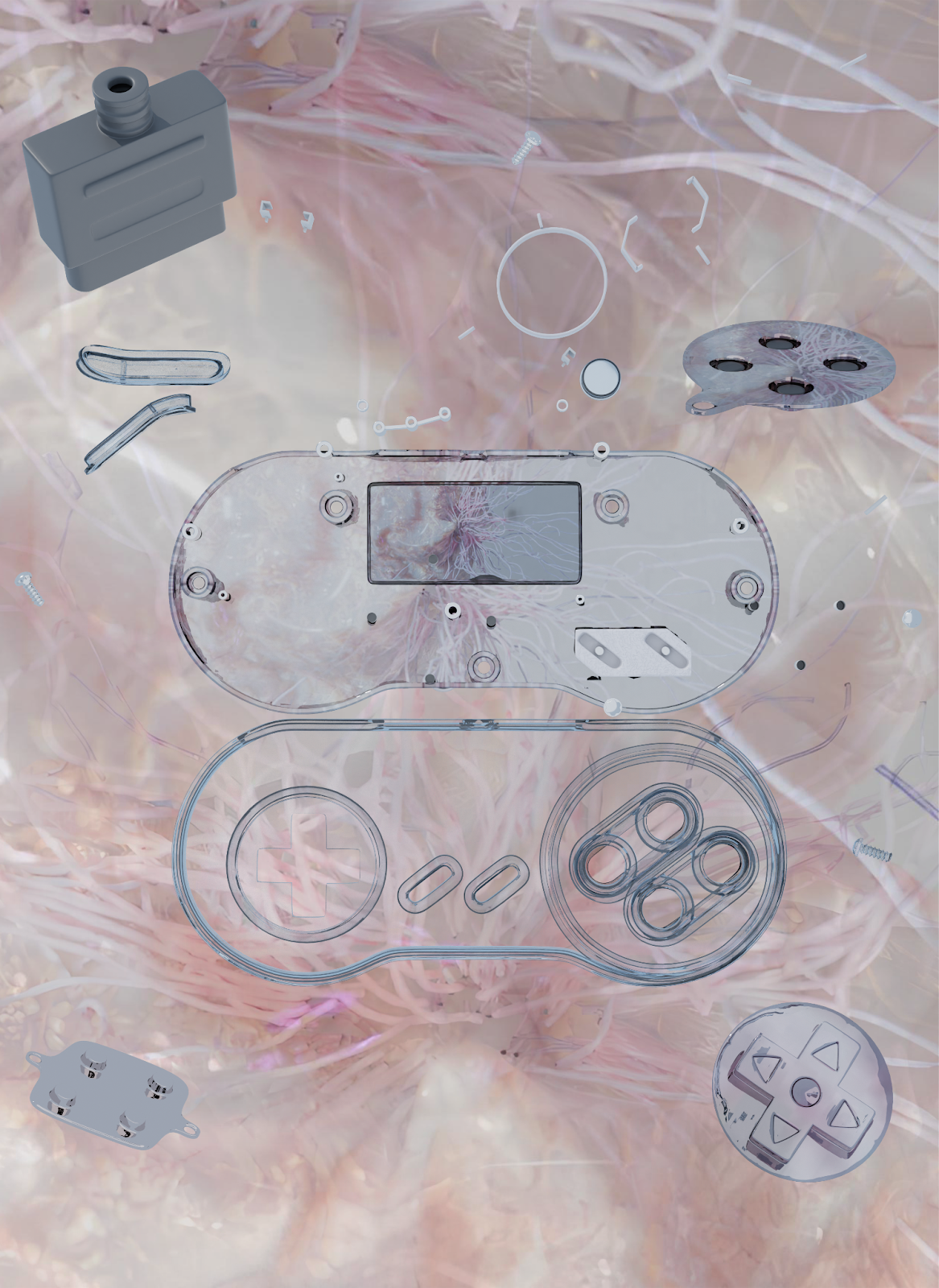

Assembloid Agency

Luba Elliott: You both come from pretty different fields that don't often overlap: bioethics and gaming simulation development. How did each of your backgrounds shape the project?

Chloe Loewith: We both come from pretty interdisciplinary backgrounds and there’s a large intersection of our interests in philosophy of technology. I'm originally from an analytical philosophy and bioethics background and have shifted my research to a more general philosophy of technology, seeing as tech has become embedded at every scale.

I was researching synthbio, hybridization and biocomputing at the University of Cambridge's Leverhulme Centre for the Future of Intelligence and was mostly interested in the potential coevolutionary AI path of biological substrates—what that means for our frameworks of benchmarking, evaluating intelligence, moral status, versions of self and knowledge production. Most of my research generally centers around assessing novel forms of distributed cognition.

Jenn Leung: Also a bit of a mixed background on my side as a simulation developer specializing in game engines, real-time tools and streaming tech. My current role is senior lecturer at the University of the Arts London, where I teach game engines, visual programming and speculative design to extremely fashionable students.

Previously, I worked in design-developer and game engine-based roles, as well as film and TV, before moving into education. It was later that I found an interest in neuroarchitecture and interaction design through my master’s at the Bartlett School of Architecture, UCL. There I was working on the 100 Minds in Motion project combining EEG, eye-tracking and movement data in an agent simulation.

Luba Elliott: How did you meet?

Chloe Loewith: We met as studio researchers for Antikythera, an R&D center incubated by the Berggruen Institute, where we were supported in developing speculative design projects through the lens of cognitive infrastructures. We started a project on scaffolding the design space of organoid intelligence along with Ivar Frisch.

Jenn Leung: It was so refreshing to meet Chloe a couple of years ago at the studio! I remember my eyes lighting up when I first heard Chloe talk about her research on human brain organoids and chimeras. Instantly I imagined all the different ways we could work together to make a fun design project.

At the beginning, Ivar, Chloe and I had some of the wildest (ungrounded) imaginations about how living neurons may one day develop more sophisticated cognitive behaviors. We imagined they might become systems that facilitate mutual communication and role specification—even develop emergent evolutionary dynamics amongst themselves.

If so, then can we, just like how we assemble silicon-based chips, create adaptive organoid chips? How do organoids communicate with each other? How do we mediate that communication through electrical stimulation and recording?

Without moving into a completely divergent path, we started developing more technical research on the topic, mostly through speaking with leading researchers in the field such as Brett Kagan, Josh Bongard and Wes Clawson, who works at the Levin Lab at Tufts.

Chloe Loewith: We also met some biohacking communities who were building their own microelectrode arrays (MEAs) and cultures. From there we recognized the need for benchmarking tools in the open-source community. We are putting efforts into knowledge mobilization and interaction to help shape this discussion and how this tech will develop. The point of Assembloid Agency is to contribute to different ways of interfacing with living neurons that might assemble into many forms.

Luba Elliott: From the title, it's clear that agency is a core aspect of your project. How do you define agency and what role does it play within biological and synthetic systems? How can biological and algorithmic agencies be contaminated?

Chloe Loewith: Both “assembloid” and “agency” have previously been used as ambitious terms for state-of-the-art technology.

“Assembloid” means the assemblage of multiple organoids, which comes with scaling up this technology to a more sophisticated system.

In our paper “Organoid array computing: The design space of organoid intelligence,” we talk about the potential for scaffolding multiple brain organoids as a way to scale, which leads to questions of the ontology of hive minds, distributed cognition and thresholds for “self” and “agents.” However, our current research is still at the scale of organoids, not full “assembloids.”

As for the term “agency,” we're working with an intentionally loaded concept here. In vitro brain organoids exist in a profoundly limited sensory state. The MEA interface stimulates and simulates an environment (in our case, a 3D game environment), giving the organoids something to “think” about, something to “be” or “do.”

This gets to some form of dual embodiment, where the organoid exists physically in the lab while having a version of “self” instantiated virtually. Brett Kagan refers to this as a kind of “bioengineered intelligence.” The interface is the point of contamination. The biological substrate provides the neural processing power for adaptive learning, while the artificial components provide the stimuli upon which to “act.” Any boundaries of agency within this assemblage are genuinely unclear.

But it is also very important to note the technical limitations we are dealing with. Organoids remain very small and at the time of this interview, organoids do not have the sophisticated neural architecture that we normally deem necessary for any ascription of moral status or agency.

Organoids are not currently agents that can “think,” “be” or “do” in any human sense of the terms. However, as this technology scales, the neural architecture will presumably become more sophisticated and this is when genuine questions of agency and moral status become extremely relevant.

We need ethical frameworks to grow alongside this tech to make sure we are not creating an entity with the ability to suffer, meaning we need to know how to both measure and evaluate relevant capacities. But there is the additional difficulty of not having a clear universalizable moral status account for even traditional biological entities, let alone synthetic biological artificial hybridized entities that may have capacities that look very alien to us.

However, right now, we're only discussing bundles of neural tissue that can be stimulated. The interface can be said to provide some nascent form of agency in the sense that it provides an environment for embodiment, an environment of choice. But these are not currently agents capable of near-human levels of thinking, learning or adapting. I think Assembloid Agency is a valuable frame for pushing against both the technical limitations and the philosophical questions around this piece.

Luba Elliott: It's fascinating to see wetware being combined with reinforcement learning. How does placing wetware in a typically digital gaming context influence the research in terms of benchmarks, development, scaling opportunities?

Jenn Leung: This was largely inspired by Cortical Labs’ DishBrain Pong project, which specifically trained living neurons to play Pong via reinforcement learning. DishBrain’s performance was then benchmarked against other RL algorithms. We are also heavily inspired by FinalSpark’s NeuroPlatform butterfly demo, built by the team and Daniel Burger, in which spiking activity triggers a butterfly to move toward the cursor on a web page. [Editor’s note: spiking is when a neuron “fires” a tiny, split-second electrical pulse to send information.]

We took the same logic but streamed the spiking activity to Unreal Engine and, using the Learning Agents plugin, let Unreal Engine stream back reward and negative signals that become electrical stimulation to the living neurons. Our work here continues to explore the interaction design space. We think that beyond stimulation and response, spiking activity can be translated into much more complex in-game behavior. It can drive decision trees and game states, alter sensing thresholds within game engines and guide crowd or swarm behavior. Finally, it can be used to train these living neurons through reinforcement learning.

We compared our proposal to a kind of extremely indie version of the OpenAI Gym, where you can drop some living neurons in a game space and see if they perform the expected gameplay behavior in the RL process.
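[Editor’s note: the closed loop described above can be pictured with a minimal Python sketch. It is not the authors’ plugin or any real MEA API. The `ToyCulture` class, the left/right decoding rule and the 1D task are all hypothetical stand-ins, and the toy “culture” emits random spike counts, so it does not actually learn.]

```python
import random


class ToyCulture:
    """Hypothetical stand-in for living neurons on an MEA (not a real API)."""

    def __init__(self, n_channels=8, seed=0):
        self.n_channels = n_channels
        self.rng = random.Random(seed)
        self.stim_log = []  # record of the feedback "stimulation" delivered

    def read_spikes(self):
        # Assumption: one spike count per channel per game tick.
        return [self.rng.randint(0, 5) for _ in range(self.n_channels)]

    def stimulate(self, kind):
        # A real system would deliver electrical stimulation here.
        self.stim_log.append(kind)


def decode_action(spikes):
    """Map spike counts to a move: -1 (left), +1 (right) or 0 (stay)."""
    half = len(spikes) // 2
    left, right = sum(spikes[:half]), sum(spikes[half:])
    return (right > left) - (left > right)


def run_episode(culture, steps=50, width=10, target=7):
    """Toy 1D task: move an agent toward a fixed target cell."""
    agent = width // 2
    total_reward = 0
    for _ in range(steps):
        action = decode_action(culture.read_spikes())
        before = abs(agent - target)
        agent = max(0, min(width - 1, agent + action))
        after = abs(agent - target)
        # Reward moves that close the distance or hold the target.
        if after < before or after == 0:
            culture.stimulate("reward")
            total_reward += 1
        else:
            culture.stimulate("negative")
    return total_reward


if __name__ == "__main__":
    culture = ToyCulture()
    print("rewarded steps:", run_episode(culture), "of 50")
```

[The point of the sketch is the shape of the loop: read spikes, decode an action, step the game, send feedback back as stimulation. In the project itself, the game side lives in Unreal Engine.]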

Seeing the whole stack as bioengineered intelligence, we will definitely need to scale the chain of hardware-software-wetware to help us really understand the problem of this dual embodiment we are interested in. And for this we also need real-time continuous training and visualization to try to understand the spiking patterns.

Chloe Loewith: We are also hoping to start working on establishing some standardized benchmarking for neural stimulation and spike decoding. We found that most existing stimulation approaches are not granular enough at the biologically relevant millisecond timescales, which means researchers are often relying on second-level bursts that are more human-interpretable but biologically suboptimal.
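[Editor’s note: a small Python sketch can illustrate why timescale matters here. The spike times below are made up, and `bin_spikes` is a hypothetical helper, not part of the project. It counts the same spikes in one-second bins and in one-millisecond bins.]

```python
def bin_spikes(spike_times_us, bin_width_us, duration_us):
    """Count spikes per time bin; all times are integer microseconds."""
    n_bins = -(-duration_us // bin_width_us)  # ceiling division
    counts = [0] * n_bins
    for t in spike_times_us:
        if 0 <= t < duration_us:
            counts[t // bin_width_us] += 1
    return counts


# Made-up example: a three-spike burst near 10 ms plus two later spikes.
spikes_us = [10_000, 11_000, 12_000, 500_000, 900_000]
one_second = 1_000_000

coarse = bin_spikes(spikes_us, bin_width_us=one_second, duration_us=one_second)
fine = bin_spikes(spikes_us, bin_width_us=1_000, duration_us=one_second)

print(coarse)  # one total for the whole second
print([i for i, c in enumerate(fine) if c])  # indices of non-empty 1 ms bins
```

[At one-second resolution the burst and the isolated spikes collapse into a single count. At one-millisecond resolution the burst shows up as three adjacent bins, which is the kind of structure second-level summaries hide.]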

Spike decoding is also not standardized and currently lacks strong principled methods. For the digital gaming context, the overall goal is to optimize for a more meaningful bidirectional communication that maps both environmental dynamics to cellular sensing and neural readouts to environmental actions.

Luba Elliott: What is the creative potential or goal of working with living cells in game engines?

Jenn Leung: We are really inspired by C. Thi Nguyen’s idea that games can help us record forms of agency, creating a library of them. For us, once the streaming-RL framework was prototyped, living neurons could be understood through game design, where gamified experimentation could potentially help us benchmark biologically engineered intelligence through structured task-based environments.

We see the game engine as a simulation space or testbed for these newly created bioengineered entities that perhaps will take many forms and shapes from open-sourcing.

Before, we used to test AI agents in games with everyone’s favorite question, “Can it play Doom?” Now, we just want to expand on these options for biological substrates.

Meanwhile, we also recognized a real need for data visualization within the synthetic biology field. There’s a lot of time series data and channel mappings that could be quickly visualized and spatialized into interactive environments.

Chloe Loewith: We can see this research branching into many directions. I would be really into prototyping stronger ethical frameworks and organizing some kind of Hitchhiker’s Guide or anthology for synthetic biology and embodied systems.

I think living cells in game engines show the potential for hybridized synthbio to disrupt our paradigms for knowledge production and the subject-object divide.

They also challenge our understanding of moral status, personhood, agency and embodiment. We are trying to center these big philosophical questions while working in this space.

It is important to keep this work accessible and engaging, which is why we’ve been trying to keep it in the creative, public IP space. We really want to see as many creative voices as possible involved in developing this space.

Luba Elliott: How do you foresee artists and creatives applying your research?

Jenn Leung: Initially, we were interested in using this proposed API to spawn many creative projects, allowing open access for scientists, researchers and artists to plug this into different things. Imagine living neurons streaming spikes to Max MSP or Ableton or doing little VJ sets. They could even do financial market predictions and online shopping.

Chloe Loewith: We are still expanding our collaborators list so that one day we will be able to commission artists and developers to co-develop this space! If anyone is interested in working in this area, please do reach out.

------

The works in the Creative AI Track at NeurIPS 2025 included devices that generated scent from memories, interactive systems for real-time human-AI musical duets, tools that enable robotic assembly of multi-component objects from natural language, and artworks such as Memo Akten’s Superradiance and Karyn Nakamura’s Surface Tension. The academic papers for all accepted submissions can be found here.

------

Ethan Chang is an interaction designer and engineer creating interactions with intelligence in the affordances of daily objects. He currently works in the MIT Design Intelligence Lab, focusing on combining physical richness with adaptive intelligence, designing objects whose behaviors evolve through software while remaining grounded in the material world. His previous work spans hardware design at Apple, model evaluation at OpenAI and artwork featured at NeurIPS, in MIT News and at the Gwangju Design Biennale. He is excited about playful forms that are specific to scenarios and about infusing these forms with intelligence that is culture-aware.

Quincy Kuang is an industrial designer currently pursuing a master’s degree at the MIT Media Lab. Kuang’s research focuses on infusing technologies into tangible play experiences to make them as dynamic and interesting as digital games.

Jenn Leung is a creative technologist and simulation developer building game engine simulations and real-time streaming tools. Currently her research focuses on developing UE interfaces for living neurons and agent behaviour simulation, with two papers recently published in the MIT Antikythera Journal and a paper on UE-API for brain-on-a-chip platforms presented at NeurIPS 2025. She is a Senior Lecturer in Creative Technology & Design at University of the Arts London, a researcher at LifeFabs Institute, and a Research Assistant at The Bartlett School of Architecture, UCL, working on the 100 Minds in Motion project combining EEG, eye-tracking, and movement data in an agent simulation.

Chloe Loewith is a researcher and writer working across synthetic biology, biomedical AI, and philosophy. She is a bioethicist and AI ethics researcher at SFU, a Fellow at the Future Impact Group Oxford, and was previously a studio researcher at Antikythera’s Cognitive Infrastructures Studio, supported by the Berggruen Institute. Her work has appeared in the MIT Antikythera Journal, TANK Magazine, AI & Society, Bianjie, HERVISIONS, and PhysioNet, with forthcoming work in Whole Earth Redux. Her current research explores hybrid ecologies and co-evolutionary human-AI interaction, with a focus on cognitive assemblages, fluid ontologies, and distributed cognition.

Luba Elliott is a curator and researcher specializing in creative AI. She presents the developments in creative AI through talks, events and exhibitions at venues across the art, business and technology spectrum, including The Serpentine Galleries, V&A Museum, Feral File, ZKM Karlsruhe and Impakt Festival. Her projects include the computer vision art gallery at CVPR, displaying 200+ works at thecvf-art.com. Elliott originally set up the NeurIPS Creativity Workshop in 2017 and was a co-chair of this year’s NeurIPS Creative AI Track.